LynxScribe - Build Smarter Gen AI Applications, Faster

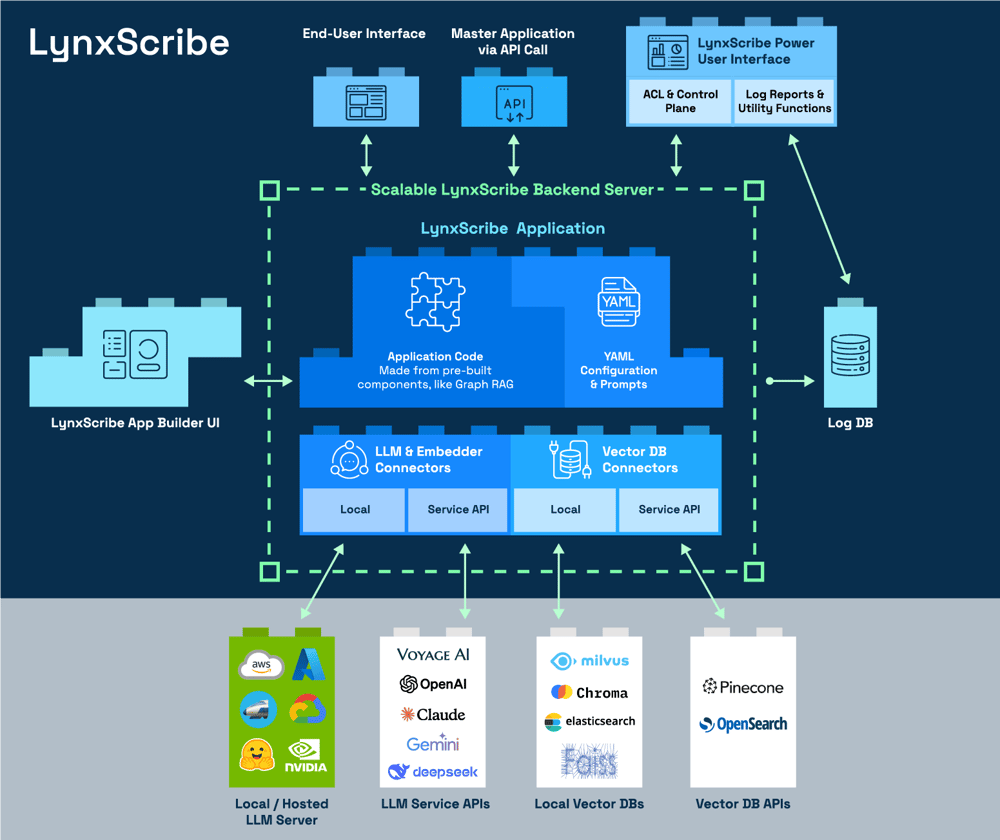

LynxScribe is our internal development toolkit designed to rapidly prototype, test, and deploy Generative AI applications. It includes a suite of modular, reusable components that integrate seamlessly with leading LLMs, service APIs, and vector databases — enabling our team to deliver value in hours, not weeks.


Delivering Benefits to Our Customers

Accelerated Project Timeline: Build and deploy custom Generative AI applications in hours, not weeks.

Cost Efficiency: Reuse components and optimize usage across hosted and API-based models.

Better Accuracy: Combine LLMs with Graph AI for more relevant and reliable results.

Examples of Applications Enabled By LynxScribe

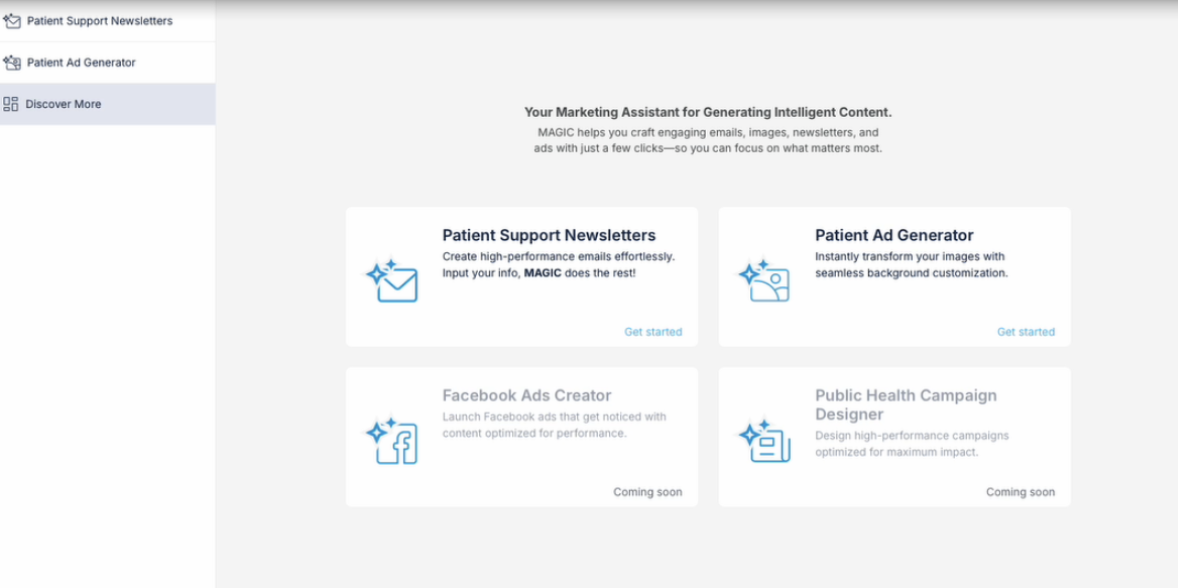

Content Generator for Marketing Campaigns

Create content quickly for different media channels such as email, social media, and print ads.

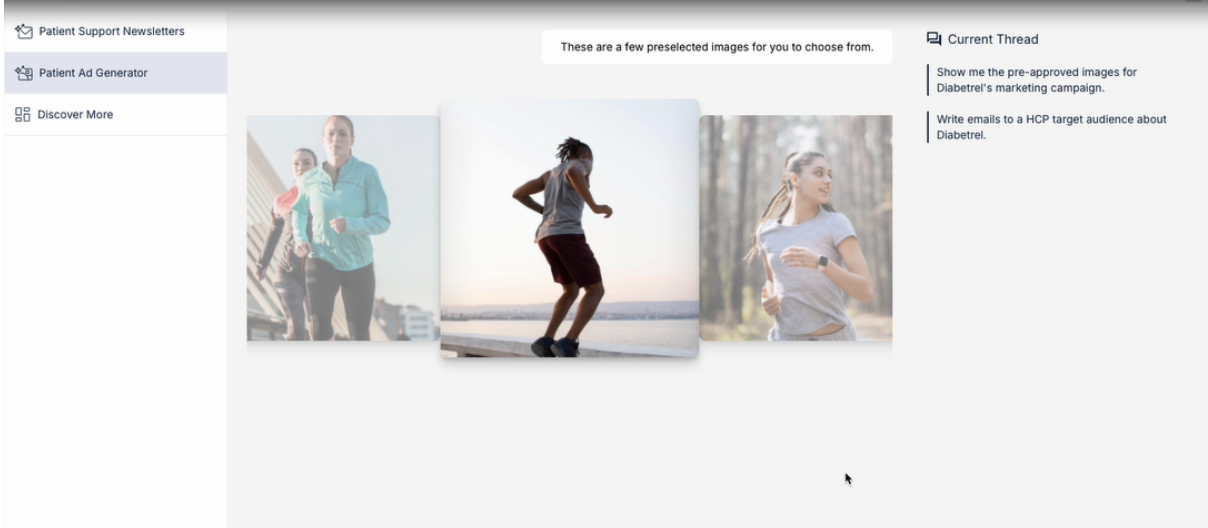

Image Generator for Marketing Campaigns

Blend images and text to create high-quality content that automatically complies with regulations and company guidelines.

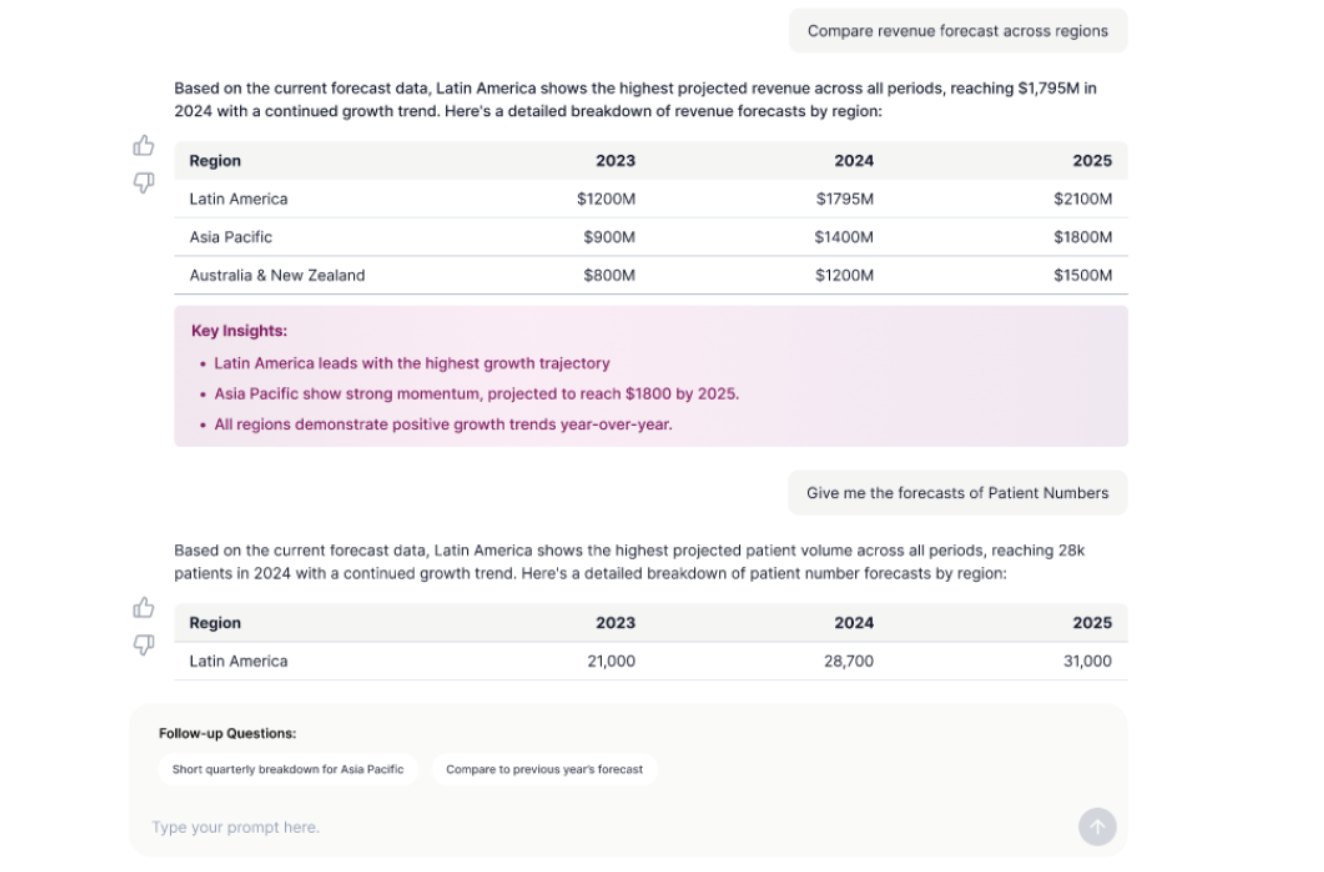

Interactive Analytics

Interrogate your data in a dialog format using natural language; generate visualizations and get automatic summaries and recommendations for further analysis.

Specialized Chatbots & Co-Pilots

Obtain fact-checked responses from compliance-vetted knowledge bases, with business rules acting as guardrails.

Modular Components: The Building Blocks of Innovation

LynxScribe building blocks are model-agnostic and compatible with any foundation model or vector database provider, so they can adapt as needs evolve. Designed to be assembled and configured, they enable rapid customization for specific customer use cases and technology environments.

LynxScribe Modular Components

LynxScribe’s modular components make solution delivery flexible and efficient. Each component is designed to perform a specific function, such as:

RAG Graphs: Integrate graph-based data with Retrieval-Augmented Generation for more accurate and context-aware results.

Cluster Algorithms: Explore and visualize user conversations, logs, or social interactions with embedding-independent clustering.

Embedders and Re-Rankers: Customize how data is embedded and ranked to improve retrieval precision across different use cases.

Audio Transcribers and Processors: Convert speech to text with high accuracy — including support for languages like Mandarin and Cantonese — using intermediate phonetic processing.

Simple Task Execution: Run lightweight NLP tasks like text generation, translation, classification, or search using simple, configurable prompts.

LynxScribe offers a flexible and efficient environment, enabling Lynx Analytics AI engineers to prototype and experiment with concepts directly in Jupyter notebooks or their own code. Once an application is refined and tailored to the customer's needs, it can be easily deployed on major cloud platforms such as Azure, AWS, or GCP.

LynxScribe’s cross-platform compatibility ensures that engineers can migrate applications effortlessly — whether moving between cloud providers or switching from OpenAI models to open-source alternatives like Llama 3 on NVIDIA GPU clusters — with minimal configuration changes. This flexibility also extends to seamless integration with other enterprise applications and tech stacks.
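To illustrate what "minimal configuration changes" can look like in practice, here is a minimal sketch of a provider-agnostic setup in Python. It is not LynxScribe's actual API: the registry, the `LLMConfig` class, the provider names `hosted_api` and `local_gpu`, and the stub backends are all hypothetical and stand in for real model clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A "completer" takes a prompt and returns generated text.
Completer = Callable[[str], str]

# Hypothetical registry mapping a provider name to a completer factory.
_BACKENDS: Dict[str, Callable[[str], Completer]] = {}


def register_backend(name: str):
    """Decorator that registers a completer factory under a provider name."""
    def decorator(factory: Callable[[str], Completer]):
        _BACKENDS[name] = factory
        return factory
    return decorator


@register_backend("hosted_api")
def _make_hosted(model: str) -> Completer:
    # Stub standing in for a hosted, API-based model client.
    return lambda prompt: f"[{model} via hosted_api] {prompt}"


@register_backend("local_gpu")
def _make_local(model: str) -> Completer:
    # Stub standing in for an open-source model served on local GPUs.
    return lambda prompt: f"[{model} via local_gpu] {prompt}"


@dataclass
class LLMConfig:
    provider: str
    model: str


def build_completer(config: LLMConfig) -> Completer:
    """Look up the backend named in the config and build a completer."""
    try:
        factory = _BACKENDS[config.provider]
    except KeyError:
        raise ValueError(f"unknown provider: {config.provider!r}") from None
    return factory(config.model)


def summarize(text: str, complete: Completer) -> str:
    """Application code depends only on the completer, not on the provider."""
    return complete(f"Summarize: {text}")
```

In this sketch, moving from one backend to another means changing only the `LLMConfig` values; `summarize` and any other application code stay untouched.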

LynxScribe supports accelerated development cycles by allowing engineers to use it as an API service or to directly copy and embed components within their own codebases for rapid customization. This enables fast prototyping, iteration, and deployment.

For even greater ease of use, LynxKite 2000:MM will offer a visual interface for managing workflows, lowering the barrier for developers and non-technical users alike. With a model-agnostic architecture and compatibility with most vector stores, the platform adapts across infrastructures, models, and data systems, making it future-proof and enterprise-ready.

Unlike conventional RAG systems that rely solely on embedding similarity, LynxScribe enhances retrieval precision by integrating graph-based reasoning.

Our Graph RAG pipeline transforms structured and unstructured data — such as documents, code, or knowledge bases — into an ontology graph. By identifying key terms and named entities, we construct meaningful connections between concepts. We then enrich this graph with synthetic questions and train edge weights using actual chat history.
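The idea behind the pipeline described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the LynxScribe implementation: key terms are supplied by hand rather than extracted, plain substring matching replaces named-entity recognition, and "training" edge weights is reduced to a simple co-usage boost taken from past chat sessions. The function names and the `step` parameter are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def build_term_graph(chunks, key_terms):
    """Link chunk ids that mention the same key term.

    Returns a dict mapping (chunk_a, chunk_b) to the number of shared terms.
    """
    term_to_chunks = defaultdict(set)
    for chunk_id, text in chunks.items():
        lowered = text.lower()
        for term in key_terms:
            if term.lower() in lowered:
                term_to_chunks[term].add(chunk_id)

    weights = defaultdict(float)
    for ids in term_to_chunks.values():
        # Sorting keeps each undirected edge under a single (a, b) key.
        for a, b in combinations(sorted(ids), 2):
            weights[(a, b)] += 1.0
    return weights


def reinforce_from_history(weights, sessions, step=0.5):
    """Boost edges between chunks that were used together in a past session."""
    for used_chunks in sessions:
        for a, b in combinations(sorted(set(used_chunks)), 2):
            weights[(a, b)] += step
    return weights


# Toy data, invented for illustration only.
chunks = {
    "c1": "Refund policy for premium plans",
    "c2": "Premium plans include priority support",
    "c3": "Contact support by email",
}
graph = build_term_graph(chunks, key_terms=["premium", "support", "refund"])
graph = reinforce_from_history(graph, sessions=[["c1", "c3"], ["c2", "c3"]])
```

After these two steps, chunks that share concepts are connected, and connections that real conversations relied on carry more weight than those that merely share a term.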

This results in a smarter knowledge base that retrieves not only the most relevant text chunks but also their contextual relationships. The outcome: dramatically improved accuracy, deeper insights, and more precise, explainable responses.
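As a rough sketch of how retrieval can return related chunks alongside the best embedding matches, the Python example below picks seed chunks by similarity score and then follows the strongest graph edges from each seed. It assumes similarity scores and edge weights are already computed; the function name, parameters, and toy values are hypothetical and do not describe LynxScribe's actual retrieval logic.

```python
from typing import Dict, List, Optional, Tuple

Edge = Tuple[str, str]


def graph_expanded_retrieve(
    similarity: Dict[str, float],
    edges: Dict[Edge, float],
    top_k: int = 1,
    max_neighbors: int = 1,
) -> List[Tuple[str, Optional[str]]]:
    """Return (chunk_id, reached_via) pairs.

    Seeds are the top_k chunks by similarity and have reached_via=None.
    Each seed then contributes up to max_neighbors unseen neighbors,
    strongest edge first, with reached_via set to that seed.
    """
    seeds = sorted(similarity, key=similarity.get, reverse=True)[:top_k]
    results: List[Tuple[str, Optional[str]]] = [(seed, None) for seed in seeds]
    seen = set(seeds)

    for seed in seeds:
        neighbors = []
        for (a, b), weight in edges.items():
            if seed == a:
                neighbors.append((weight, b))
            elif seed == b:
                neighbors.append((weight, a))
        # Strongest edges first; ties broken by chunk id for determinism.
        neighbors.sort(key=lambda item: (-item[0], item[1]))

        added = 0
        for _, other in neighbors:
            if added == max_neighbors:
                break
            if other in seen:
                continue
            results.append((other, seed))
            seen.add(other)
            added += 1
    return results


# Toy inputs, invented for illustration only.
similarity = {"c1": 0.9, "c2": 0.4, "c3": 0.1}
edges = {("c1", "c2"): 1.0, ("c1", "c3"): 0.5, ("c2", "c3"): 1.5}
hits = graph_expanded_retrieve(similarity, edges, top_k=1, max_neighbors=1)
```

Because each expanded chunk records which seed it was reached from, the response can show why a passage was included, which is one simple way to make results easier to explain.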
